Data is growing faster than humans can realistically analyze it. Images, text, speech, sensor signals and behavioral logs pile up far beyond what manual analysis or rule-based systems can handle. Deep learning offers a way to make sense of this complexity by processing massive, unstructured datasets and extracting meaning at machine speed.

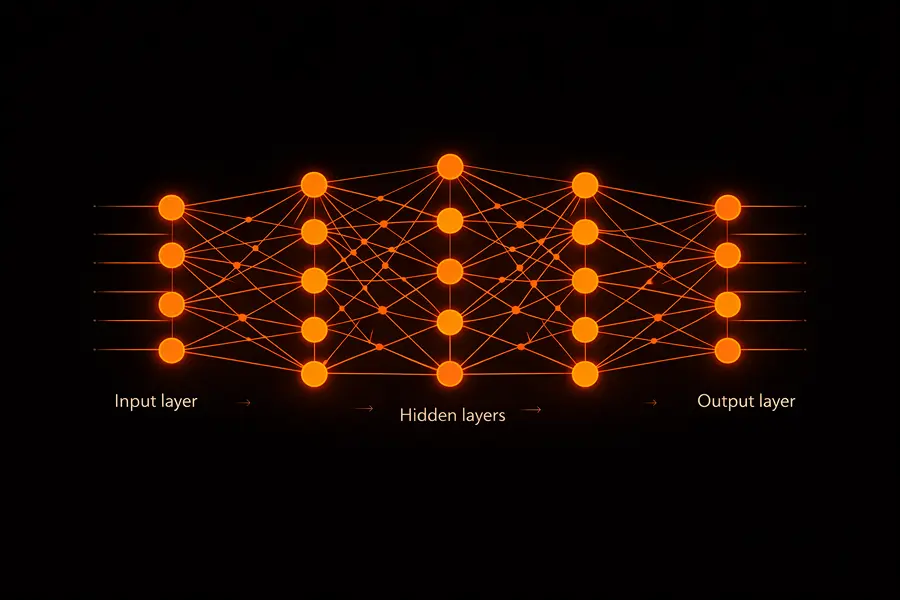

Modern deep learning systems are built on multi-layer neural networks, including convolutional neural networks, recurrent architectures and transformer-based models. These systems detect patterns in images, interpret human language and audio signals, and analyze time-series data, often with higher accuracy than traditional machine learning approaches on such unstructured inputs.

What makes deep learning especially powerful is its ability to learn representations automatically. Instead of relying on hand-crafted rules or features, the model improves by learning directly from data. When it is retrained on new, representative examples, its predictions can become more accurate, more robust and more aligned with real-world conditions.

How Deep Learning Works in Practice

At its core, deep learning is loosely inspired by how the human brain processes information. Rather than telling a system exactly what to look for, you provide examples and let the model discover patterns on its own.

A neural network takes raw input data and passes it through multiple internal layers. Each layer extracts increasingly abstract features, gradually filtering noise and highlighting what actually matters. The final layers then use this learned representation to make predictions or decisions.
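
To make the layer-by-layer flow concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the random (untrained) weights and the helper names `dense`, `init_layer` and `forward` are all assumptions chosen for illustration; a real system would learn its weights from data and use a framework rather than hand-written loops.

```python
import math
import random

def relu(values):
    """Element-wise ReLU: negative activations are set to zero."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    """Random, untrained weights (illustrative only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    """Pass the input through each hidden layer, then the output layer."""
    *hidden, (w_out, b_out) = layers
    for w, b in hidden:
        x = relu(dense(x, w, b))          # intermediate representation
    return softmax(dense(x, w_out, b_out))  # class probabilities

rng = random.Random(0)
# 4 raw input features -> two hidden layers of 8 units -> 3 classes
layers = [init_layer(4, 8, rng), init_layer(8, 8, rng), init_layer(8, 3, rng)]
probs = forward([0.2, -1.3, 0.7, 0.05], layers)
print(probs)
```

Each call to `dense` followed by `relu` plays the role of one internal layer; the final `softmax` turns the last representation into a prediction.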

In real-world applications, deep learning systems are particularly effective because they can:

- learn directly from messy, incomplete or noisy data

- identify subtle patterns in images, audio or text that humans often miss

- solve tasks once considered too complex for machines, such as medical image analysis or real-time language translation

- improve continuously as more data becomes available

Traditional machine learning often requires extensive manual feature engineering. Deep learning removes much of that burden, making it far better suited for high-dimensional, unstructured data. This is why it has become the backbone of tools people use every day — from recommendation engines and voice assistants to translation systems and computer vision applications.

The real advantage is scale. Deep learning systems process information in ways that resemble human perception, but they do so faster, more consistently and across volumes of data no team could handle manually.

Where Deep Learning Delivers the Most Value

As data grows more complex, the challenge shifts from storage to interpretation. Deep learning excels in environments where extracting meaning at scale is critical.

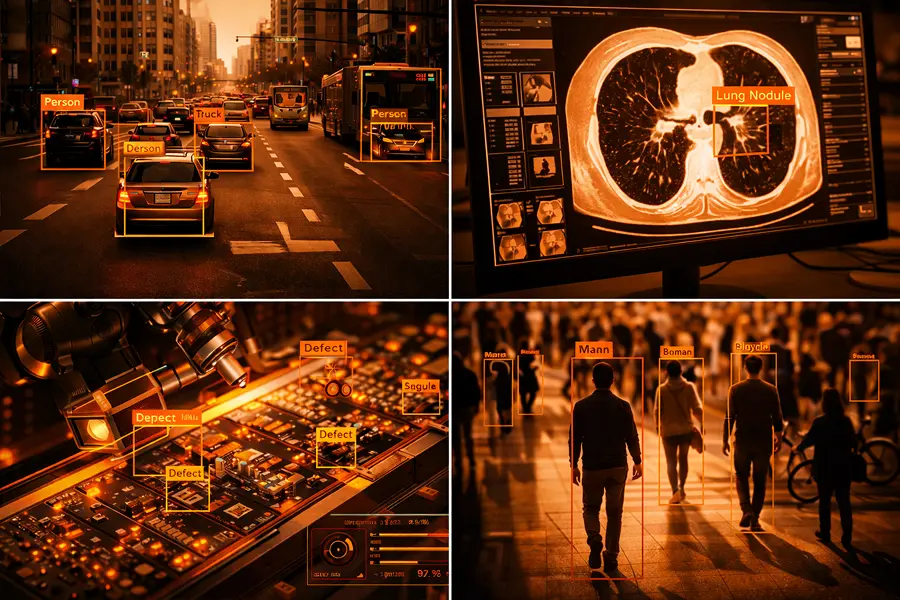

Computer Vision Systems

Deep learning models built on convolutional neural networks can analyze images and video streams with remarkable precision. They detect objects, classify scenes, identify anomalies and understand spatial relationships in real time.
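
The core operation behind these models is a small filter that slides across the image. The sketch below applies one filter to a toy image in plain Python. The 5x5 image and the hand-written vertical-edge kernel are assumptions for illustration; in a trained convolutional network the filter values are learned, and many filters are stacked across layers.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers use."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum of the image patch under the kernel
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output

# Toy grayscale image: dark columns on the left, bright columns on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge filter (illustrative; a CNN would learn this).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)  # each row is [3.0, 3.0, 0.0]
```

The filter responds strongly where dark pixels meet bright ones and returns zero over the uniform region, which is how early layers highlight edges before later layers combine them into shapes and objects.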

Typical use cases include automated quality inspection, object detection in video feeds, medical image analysis and continuous monitoring in security or industrial environments. These systems are widely applied in healthcare, manufacturing, automotive, retail and security workflows.

Natural Language Understanding and Generation

Language is inherently ambiguous, contextual and messy, which makes it a natural fit for deep learning. Transformer-based models (including large language models) and recurrent architectures learn to interpret meaning, sentiment and intent directly from text and speech.
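
One way transformer-based models handle context is attention: each token's representation is rebuilt as a weighted mix of the other tokens. The following is a simplified sketch of scaled dot-product attention in plain Python. The three tokens and their 2-dimensional embeddings are made up, and the learned query, key and value projections used in real transformers are deliberately left out.

```python
import math

def softmax(values):
    """Turn raw scores into weights that sum to 1."""
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted average of the value vectors
        mixed = [sum(w * v[dim] for w, v in zip(weights, values))
                 for dim in range(len(values[0]))]
        outputs.append(mixed)
    return outputs, all_weights

# Hypothetical embeddings: "bank" overlaps with both "river" and "money".
tokens = ["bank", "river", "money"]
embeddings = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]

outputs, weights = attention(embeddings, embeddings, embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

In this toy setup "river" attends more to "bank" and to itself than to "money", because its embedding has no overlap with the "money" vector.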

Common applications include sentiment analysis, speech-to-text systems, machine translation, document intelligence and content summarization. These capabilities power customer support platforms, internal knowledge systems and voice-driven interfaces across many industries.

Predictive Modeling and Forecasting

Deep learning models are especially effective at capturing non-linear relationships and hidden dependencies in historical data. This makes them well suited for forecasting and risk assessment in noisy, real-world environments.

Typical scenarios include fraud detection, demand forecasting, market trend analysis and behavioral risk scoring. These models help organizations anticipate future outcomes rather than simply reacting to past events.

Generative and Synthetic Data Models

Generative models such as GANs and diffusion models can create new data rather than just analyzing existing information. They are used to generate images, text or synthetic datasets that support training and simulation.

These approaches are particularly valuable in domains where real data is scarce, expensive or sensitive — such as medical imaging, robotics simulation or advanced R&D environments.

Domain-Specific Deep Learning Architectures

Not all problems can be solved with off-the-shelf models. In complex or highly specialized environments, custom architectures combine convolutional, recurrent and transformer-based layers to match specific data characteristics.

These systems may work with industrial sensor data, multimodal inputs or on-device inference for edge computing. They are commonly used in manufacturing, energy, telecom, logistics and biotech — anywhere standard models fail to capture the full picture.

What It Takes to Build a Deep Learning System

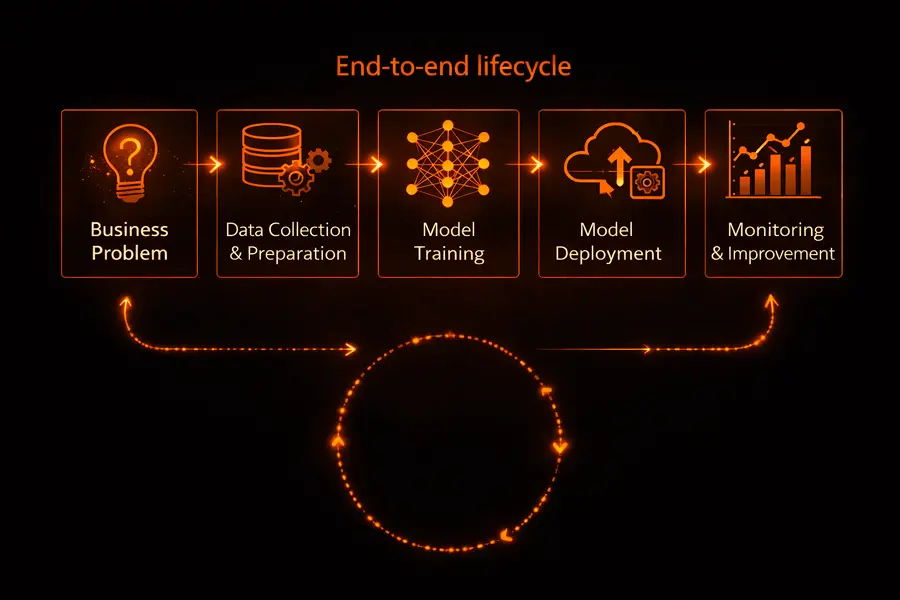

Building a deep learning system involves far more than training a model. It requires a structured process that turns raw data into a reliable, production-ready decision engine.

Data Strategy and Preparation

Every project starts with a fundamental question: is the available data suitable for training? Raw datasets often contain noise, imbalance, missing values or inconsistencies that can mislead a model.

Preparing data means cleaning, annotating, structuring and validating it so the model learns the right signals. A strong data pipeline leads to faster training, more stable performance and fewer surprises after deployment.
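
As a rough illustration of what cleaning and validating can involve, the sketch below handles three of the issues mentioned above: missing values, inconsistent scale and class imbalance. The column of transaction amounts, the labels and the helper names are hypothetical, and median imputation and inverse-frequency class weights are just one reasonable set of choices, not a universal recipe.

```python
import math
from statistics import median

def impute_missing(column):
    """Replace None with the median of the observed values."""
    observed = [v for v in column if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in column]

def standardize(column):
    """Rescale to zero mean and unit variance (population std)."""
    mean = sum(column) / len(column)
    var = sum((v - mean) ** 2 for v in column) / len(column)
    std = math.sqrt(var) or 1.0  # avoid dividing by zero on constant columns
    return [(v - mean) / std for v in column]

def class_weights(labels):
    """Inverse-frequency weights so rare classes count more during training."""
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    n, k = len(labels), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}

# Hypothetical raw data: two missing amounts and one rare "fraud" label.
raw_amounts = [12.0, None, 15.5, 11.0, None, 240.0]
labels = ["ok", "ok", "ok", "ok", "ok", "fraud"]

imputed = impute_missing(raw_amounts)
clean = standardize(imputed)
weights = class_weights(labels)

print(imputed)
print(weights)
```

The median is used instead of the mean so that the single large amount does not distort the fill value.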

Model Design and Architecture

The choice of architecture depends largely on the type of data involved: images, text, sequences, signals or combinations of these. Designing the right structure also involves balancing interpretability, performance and operational constraints.

At this stage, decisions are made about how the model integrates with existing systems and how well it can adapt as business requirements evolve.

Training and Optimization

Training is where the model learns to generalize beyond the training data. Techniques such as regularization, learning-rate scheduling and architecture refinement help prevent overfitting and improve robustness.
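
Two of the techniques named above, regularization and learning-rate scheduling, can be shown on a deliberately tiny problem. The sketch below fits a one-variable linear model with gradient descent, an L2 penalty on the weight and a step-decay schedule. The synthetic data, the hyperparameter values and the function names are assumptions for illustration; deep networks apply the same ideas to millions of parameters through a framework's optimizer.

```python
import random

def step_decay(base_lr, epoch, drop=0.5, every=50):
    """Multiply the learning rate by `drop` every `every` epochs."""
    return base_lr * (drop ** (epoch // every))

def train(xs, ys, epochs=200, base_lr=0.1, l2=0.01):
    """Fit y ~ w*x + b by gradient descent on MSE + l2 * w**2."""
    w, b = 0.0, 0.0
    n = len(xs)
    for epoch in range(epochs):
        lr = step_decay(base_lr, epoch)
        # Gradient of the mean squared error, plus the L2 penalty on w
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_w += 2 * l2 * w
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Synthetic data: y = 3x + 1 plus a little Gaussian noise.
rng = random.Random(42)
xs = [i / 10 for i in range(-20, 21)]
ys = [3.0 * x + 1.0 + rng.gauss(0, 0.1) for x in xs]

w, b = train(xs, ys)
print(w, b)
```

The L2 term pulls the weight slightly toward zero, trading a little fit on the training data for stability, while the decaying learning rate takes large steps early and smaller, more careful steps later.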

Well-trained models handle real-world variability gracefully rather than breaking when conditions change.

Deployment and Integration

A useful model must operate reliably in production. This involves building inference pipelines, wrapping models in APIs and optimizing them for latency and scale — whether in the cloud, on mobile devices or at the edge.
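
A minimal sketch of the "wrap the model in an API" idea is shown below, using only the standard library. The linear `predict` function stands in for a trained model, and `MODEL_WEIGHTS`, `MODEL_BIAS`, the `features` field and the response shape are all hypothetical. A production service would sit behind a real web framework and add batching, authentication and logging.

```python
import json

# Hypothetical parameters standing in for a trained model.
MODEL_WEIGHTS = [0.8, -0.4, 0.1]
MODEL_BIAS = 0.05

def predict(features):
    """Stand-in for model inference: a plain linear score."""
    return sum(w * x for w, x in zip(MODEL_WEIGHTS, features)) + MODEL_BIAS

def is_number(value):
    """True for ints and floats, but not booleans."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)

def handle_request(body):
    """Validate a JSON request body and return a JSON response string."""
    try:
        features = json.loads(body)["features"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return json.dumps({"error": "expected a JSON object with 'features'"})
    if (not isinstance(features, list)
            or len(features) != len(MODEL_WEIGHTS)
            or not all(is_number(v) for v in features)):
        return json.dumps(
            {"error": f"'features' must be {len(MODEL_WEIGHTS)} numbers"})
    return json.dumps({"score": round(predict(features), 6)})

print(handle_request('{"features": [1, 2, 3]}'))
print(handle_request('{"features": [1, 2]}'))
```

Validating inputs at the boundary keeps malformed requests from reaching the model, so failures surface as clear error messages rather than silent bad predictions.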

The goal is not a standalone prototype, but a stable component embedded in real operational workflows.

Monitoring and Continuous Improvement

The environment around a deployed model changes over time. Data distributions shift, user behavior changes and performance can degrade if models are left unattended.

Ongoing monitoring tracks accuracy, drift and confidence levels, triggering retraining or fine-tuning when needed. This continuous loop helps keep the model aligned with real-world conditions over the long term.
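
One common way to quantify drift on a single input feature is the Population Stability Index (PSI), which compares the feature's distribution at training time with what the model sees in production. The sketch below is one possible implementation in plain Python. The synthetic samples, the bin count and the 0.25 retraining threshold are assumptions; that threshold is a commonly cited rule of thumb, not a fixed standard.

```python
import math
import random

def psi(reference, live, bins=10):
    """Population Stability Index between two samples of one feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0

    def histogram(sample):
        counts = [0] * bins
        for v in sample:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty
        return [max(c / len(sample), 1e-6) for c in counts]

    ref_p, live_p = histogram(reference), histogram(live)
    return sum((p_live - p_ref) * math.log(p_live / p_ref)
               for p_ref, p_live in zip(ref_p, live_p))

rng = random.Random(7)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]   # data the model was trained on
stable = [rng.gauss(0.0, 1.0) for _ in range(2000)]     # production data, same distribution
shifted = [rng.gauss(1.0, 1.0) for _ in range(2000)]    # production data after a shift

RETRAIN_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff

for name, sample in [("stable", stable), ("shifted", shifted)]:
    score = psi(training, sample)
    print(name, round(score, 3), "retrain" if score > RETRAIN_THRESHOLD else "ok")
```

In practice a check like this would run on a schedule for each important feature, alongside accuracy tracking on labeled samples when labels are available.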

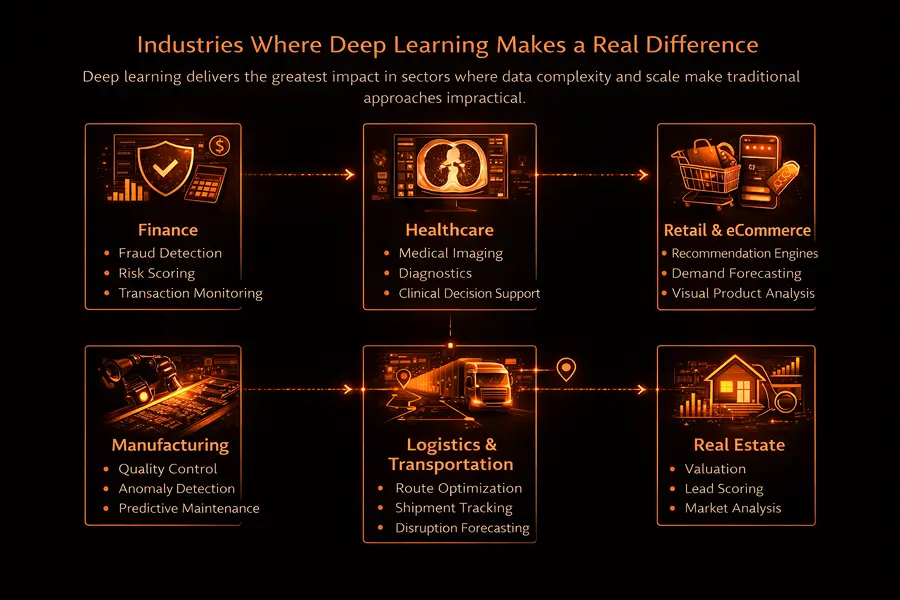

Industries Where Deep Learning Makes a Real Difference

Deep learning delivers the greatest impact in sectors where data complexity and scale make traditional approaches impractical.

- In finance, models support fraud detection, risk scoring and transaction monitoring.

- In healthcare, they assist with medical imaging, diagnostics and clinical decision support.

- In retail and eCommerce, deep learning powers recommendation engines, demand forecasting and visual product analysis.

- In manufacturing, it enables quality control, anomaly detection and predictive maintenance.

- In logistics and transportation, models optimize routes, track shipments and forecast disruptions.

- In real estate, deep learning supports valuation, lead scoring and market analysis based on unstructured data.

Across all these industries, the common thread is the need to extract reliable insights from complex information streams.

Final Thoughts

Deep learning has moved well beyond experimentation. Today, it underpins systems that analyze images, understand language, forecast outcomes and automate decisions at a scale no human team could manage.

When applied thoughtfully, deep learning transforms raw data into actionable insight — not through rigid rules, but through systems that learn, adapt and improve over time. For organizations dealing with complexity, volume or unstructured information, that capability can become a lasting competitive advantage.

Understanding how these systems work and where they truly add value is the first step toward building intelligent, future-ready solutions.