Organizations are being pushed to make real decisions about agentic AI sooner than planned. By 2025, over half of organizations are actively exploring AI agents, and roughly two-thirds of senior leaders say their agentic initiatives are already producing measurable business value. At the same time, adoption is moving faster than most teams can comfortably manage, especially outside engineering-heavy environments.

That tension is exactly why an agentic AI proof of concept matters. This guide explains how to use a PoC as a controlled business test rather than a technical showcase. It breaks down how agentic AI PoCs work, why companies rely on them to reduce risk, and how leaders can evaluate results using real performance signals such as time saved, errors reduced, and decisions improved rather than assumptions or hype. The emphasis stays on practical outcomes: what the AI can handle on its own, where human oversight is still required, and whether the evidence supports scaling further.

Introduction: Why Agentic AI Proofs of Concept Matter

Before rolling out AI agents across an entire business, teams need to verify that they can actually work in real-world conditions, not just in theory. An agentic AI PoC is a short, controlled test designed to answer one practical question: can an autonomous agent deliver value inside a real workflow without introducing new risks or operational friction?

At this stage, it also helps to be clear about the types of AI agents being tested, since different agent designs behave very differently once they interact with live systems, data, and constraints.

Rather than tackling big transformation goals all at once, an agentic AI PoC zeroes in on a narrow slice of work, such as routing tickets, reviewing documents, updating systems, or supporting decisions. It then checks how an AI agent behaves under real-life constraints, which lets you spot weak points early: data issues, odd edge cases, governance limits, and integration headaches.

That’s why proofs of concept are such a big deal in areas like finance, healthcare, logistics, and e-commerce. They let you gauge the real impact, risks, and effort before you commit to scaling up. Done right, a proof of concept trades assumptions for hard evidence and turns agentic AI from a pie-in-the-sky idea into something you can really put to the test.

What’s the point of an AI PoC?

An AI Proof of Concept (PoC) is a focused, time-limited experiment testing whether a specific artificial intelligence system, model, or agent can reliably perform a particular business task. The goal is to validate if the idea is worth pursuing before significant investment. In agentic AI, a PoC tests whether an agent can plan, accomplish tasks, and adapt within real workflows.

Key areas a PoC looks at

An agentic AI PoC is all about two main things:

Can it work technically?

Can the agentic AI actually get the job done accurately and consistently? This involves testing large language models, predictive maintenance agents, or other agentic AI components against real-world inputs and edge cases. In other words, it checks the things that can trip up a system.

Can it deliver some real value?

Does the artificial intelligence actually deliver some tangible benefits, like time or cost savings, reducing human bottlenecks, or making better decisions?

How a PoC works

Think of a PoC as a controlled experiment space. It focuses on a single operation or multi-step task, uses high-quality or simulated data, and reproduces real-world conditions as closely as possible without risking sensitive information or disrupting operations.

Some typical characteristics of a PoC:

- Narrow focus, tackling just one specific business problem

- Runs for just 4-8 weeks, so you can get some quick results

- Measures outcomes like task success, time saved, and how much human intervention is needed

- Examines how the agent behaves to spot any gaps, errors, or limitations

A PoC also reveals hidden weaknesses, such as incomplete data, fragmented workflows, integration problems with enterprise systems, or edge cases that the AI can’t handle.

How a PoC differs from other early-stage options

- Prototype: All about the user experience and interface

- MVP: Delivers a minimal but production-ready version

- PoC: Proves it can work and actually deliver some value. The key question is: “Can this AI agent reliably get the job done and produce some real value?”

A PoC does not require all the features of a complete system, such as multi-user support, parallel operations, version control, or audit logs. Its purpose is to test performance and value in realistic conditions. When done right, it provides insights, reduces uncertainty, builds confidence, and guides decisions on rolling out agentic AI to the business.

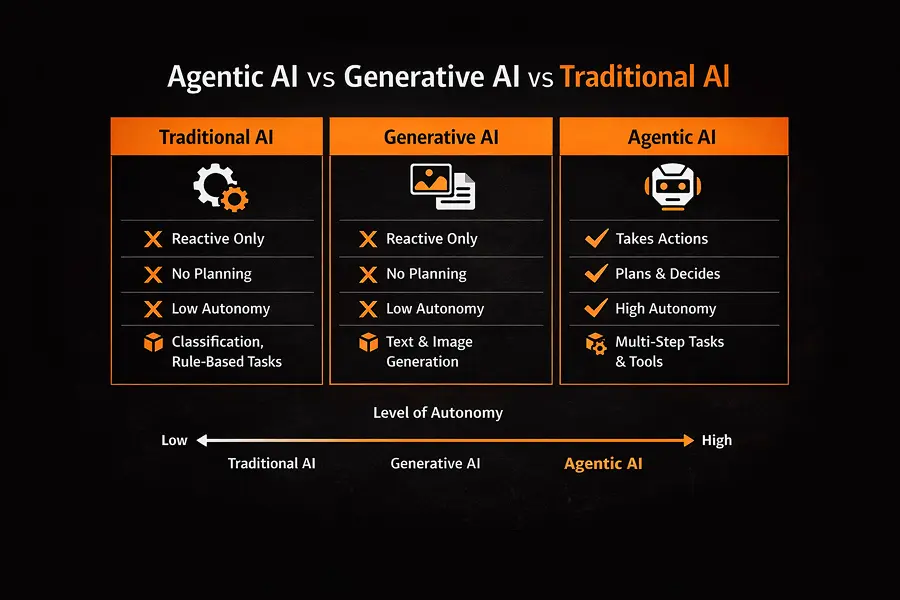

How Agentic AI Differs from Traditional AI

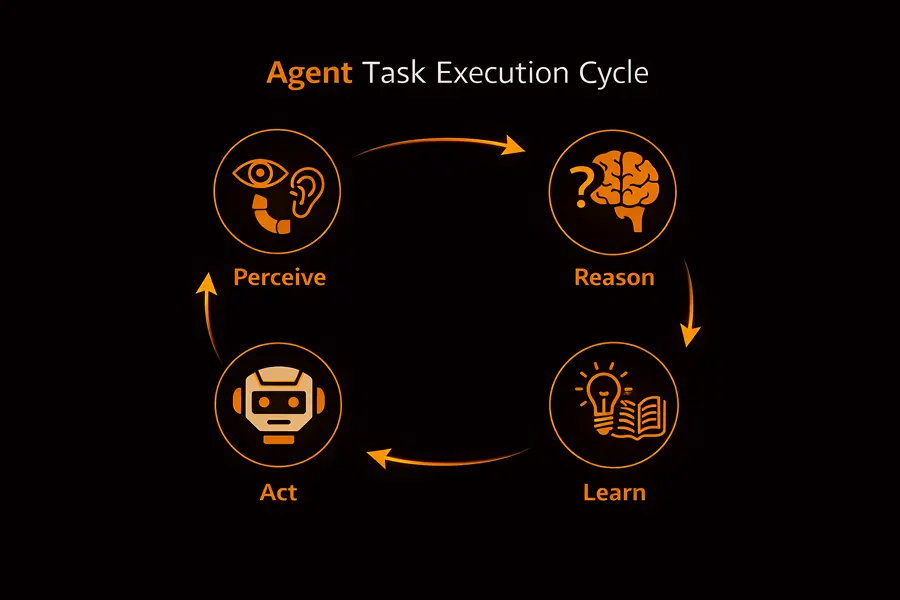

Before diving into metrics and results, it helps to be clear about what is actually being tested. A PoC for agentic AI is not evaluating how well a system responds to prompts. It is assessing whether an AI system can take responsibility for completing work, step by step, inside real business processes.

| AI Approach | Primary Role | Practical Limitation |
|---|---|---|
| Chatbot | Answers questions using natural language | Cannot take actions or interact with enterprise systems |
| LLM-powered workflow | Executes predefined, fixed sequences of steps | Breaks when inputs or conditions change |
| Agentic AI | Defines goals, decides next actions, uses tools, and interacts with enterprise data to complete complex workflows | Requires governance, monitoring, and clear boundaries to manage autonomous behavior |

This difference is why agentic AI PoCs look very different from traditional AI pilots. They validate execution, coordination, and decision-making under real conditions. The question is no longer “Does the model respond well?” but “Can the system reliably carry work to completion without constant human intervention?”

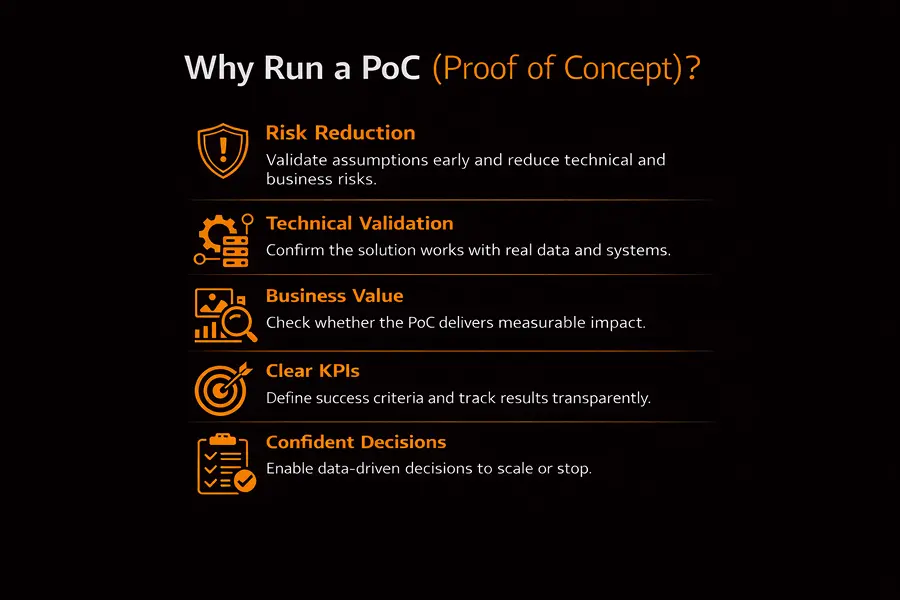

Why Run an AI Agent PoC?

Business users typically run an AI agent PoC for one overarching reason: they want clarity before they scale. A controlled experiment lets them see how the idea behaves in the real world, not in a slide deck.

Reducing Uncertainty

Agentic AI systems can feel unpredictable, especially when they interact with noisy data or multi-step internal processes. A PoC keeps everything contained. It becomes a safe environment where teams can observe how the agent reasons, fails, recovers, and handles exceptions without risking production operations.

Protecting the Budget and Avoiding Rework

Long projects hide expensive surprises. A PoC exposes them early. Some ideas turn out to be simple; others reveal hidden dependencies, missing data, or edge-case explosions. By uncovering this upfront, companies avoid spending six figures on a system that would have needed re-architecting later.

Demonstrating Tangible Value

Decision-makers want evidence, not promises. A PoC produces numbers that are hard to ignore:

- time saved in a single workflow

- accuracy gains compared to the manual baseline

- faster task resolution

- fewer handoffs or delays

Even a small improvement can justify a full rollout when multiplied across months of operations.
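
To make that multiplication concrete, here is a minimal sketch in Python. The function name and every input figure are hypothetical, chosen only to illustrate the arithmetic; they are not results from any real PoC.

```python
def projected_hours_saved(minutes_saved_per_task: float,
                          tasks_per_day: int,
                          working_days: int) -> float:
    """Scale a per-task time saving up to a longer operating period, in hours."""
    return minutes_saved_per_task * tasks_per_day * working_days / 60


# Illustrative inputs only: 3 minutes saved per task, 200 tasks a day,
# roughly six months of working days.
hours = projected_hours_saved(minutes_saved_per_task=3,
                              tasks_per_day=200,
                              working_days=120)
print(f"Projected saving: {hours:.0f} hours")
```

Plugging in the PoC's own measured saving and real task volumes turns a small per-task number into a figure decision-makers can weigh against the cost of a rollout.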

Getting Stakeholders Aligned

Different teams see the same workflow through different lenses. Data teams care about inputs, technical teams care about security and integrations, and operations care about reliability. When everyone watches the same small working system, alignment becomes faster and much less abstract.

Building a Roadmap That Makes Sense

A PoC also reveals the truth about what should come next. Some parts of the workflow are ready for automation right away; others need redesign. Over time, these experiments help organizations ramp up their AI maturity step by step, without the chaos of a massive transformation effort.

How Agentic AI Is Changing What a PoC Needs to Prove

An agentic AI system shifts the focus of a PoC from response quality to task execution. Instead of judging model accuracy or language ability, teams evaluate whether an agent can run a workflow autonomously, coordinate steps across systems, and produce outcomes that can be measured.

From Chat-Based to Real-World Use Cases

Up until now, AI PoCs were all about the quality of the conversation. That made sense when systems were mainly just chat-based. Agentic AI flips that on its head.

An agentic AI PoC can evaluate agent behavior by seeing how well it reviews a loan application, gathers information from multiple systems, and flags missing or problematic data before submission. The value isn’t in the words the agent uses to respond, but in how much of the back-and-forth is taken out of the process. What used to take days can now be done in minutes.

The whole point of an agentic AI PoC is to show whether an agent is actually capable of moving a workflow forward.

From Doing a Good Demo to Actually Changing the Business

With agentic artificial intelligence, a slick-looking demo isn’t enough anymore. Teams want to see the numbers.

In fraud detection, for example, agents can continuously watch for suspicious patterns across multiple systems and bring in human reviewers only when something really does look suspicious. A PoC here isn’t about explaining all the rules and regulations; it’s about proving that fewer cases are sent to human reviewers, there are fewer false positives, and response times are shorter.

This shift also makes it clearer to business users why resources are being allocated to AI, as the focus is on measurable outcomes rather than abstract capabilities.

From One Model to a Whole Team of Agents

Agentic PoCs often test small teams of agents working together rather than relying on a single model to do everything. In insurance, for example, one agent might gather initial loss details, another classifies the claim, and a third decides whether it can be processed automatically or requires human intervention.

These agent configurations reveal where coordination breaks down, where human validation is necessary, and how much oversight is required. These insights are difficult to gain from theoretical analysis alone.
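
The insurance example above can be sketched as a three-stage pipeline. This is an illustration under stated assumptions, not a reference design: the `Claim` fields, the keyword rule, and the `auto_limit` threshold are all hypothetical, and in a real PoC each function would wrap a model or tool call rather than a hard-coded rule.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    description: str
    amount: float
    category: str = "unclassified"
    decision: str = "pending"


def intake_agent(raw: dict) -> Claim:
    """Stage 1: gather the initial loss details into a structured record."""
    return Claim(description=raw["description"].strip(),
                 amount=float(raw["amount"]))


def classification_agent(claim: Claim) -> Claim:
    """Stage 2: classify the claim (placeholder keyword rule, not a real model)."""
    words = set(claim.description.lower().split())
    claim.category = "auto" if words & {"car", "vehicle"} else "other"
    return claim


def triage_agent(claim: Claim, auto_limit: float = 1000.0) -> Claim:
    """Stage 3: process automatically only for small, recognised claims."""
    if claim.category == "auto" and claim.amount <= auto_limit:
        claim.decision = "auto_process"
    else:
        claim.decision = "human_review"
    return claim


def run_pipeline(raw: dict) -> Claim:
    """Chain the three agents; each handoff is a point where coordination can fail."""
    return triage_agent(classification_agent(intake_agent(raw)))


result = run_pipeline({"description": "Minor car scratch", "amount": "400"})
print(result.category, result.decision)
```

Keeping each stage as a separate function makes it easy to log the handoffs and to see which stage sent a claim to human review.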

From Writing Code to Using the Right Tools

Agentic PoCs also change the way teams think about actually getting the implementation done.

Rather than just hardcoding the logic, agents are tested on their ability to use whatever tools and systems are already in place. In logistics, for example, one agent might be responsible for rerouting shipments when the weather gets in the way, while another selects the best carrier based on past performance and cost. The PoC isn’t about whether the model did all the work itself; it’s about whether the agent can access the right systems, make decisions with limited data, and still recover when something goes wrong.

The focus is no longer mainly the model itself, but how well the agent works inside the real infrastructure.

From Avoiding Risk to Managing It

Agentic PoCs don’t ignore risk. They make it visible and manageable.

In banking, autonomous agents track exposures in real time and estimate default risk. In healthcare, they speed up structured imaging reports without lowering clinical standards. These PoCs are designed with approvals, logs, and fallback paths from day one. Autonomy is managed through clear boundaries, auditability, and the ability to intervene when needed.

Across industries, PoCs are where intelligent systems become practical. One global industrial firm uses agents to spot equipment issues early and trigger maintenance before failures happen. The payoff is less downtime and visible cost savings, not just better forecasts.

Choosing the Right Agentic AI Proof of Concept

Agentic AI PoCs go wrong when the scope is defined at the level of an idea rather than in terms of the work that needs to get done. Without a clear boundary, it’s pretty much impossible to tell whether you’ve succeeded or failed.

A PoC’s purpose is to test one process at a time. The goal isn’t to explore all the possibilities; it’s to figure out whether having an agent improves execution in a specific, measurable way.

Step 1: Define the Work, Not the AI

First, we need to look at the task itself and not the agentic AI that’s going to tackle it. Take a close look at how the entire process is done today, where it slows people down, and what tasks could be improved.

Agentic AI is most effective in workflows that involve repetition, handoffs between systems, or decisions based on patterns rather than case-by-case human judgment. Most agentic AI PoCs fit into one of four well-defined categories.

- Knowledge-driven work involves reading, extracting, and getting information into shape. This includes internal research, reporting, reviewing documents, and structuring unstructured data. This is where strong reasoning and retaining context are a huge advantage.

- Operational work is all about processes. Updating records, routing requests, scheduling repetitive tasks, or syncing data between systems are all typical examples. These tasks suit agents that can execute steps reliably but still respond when inputs change.

- Decision-oriented work includes prioritisation, approvals, and risk scoring. Here, the AI agent either supports the decision-making or executes it with some rules in place, and escalation if needed.

- Planning or creative work focuses on drafting, outlining, or generating options. In these cases, workflows rely on context awareness and adaptability rather than strict automation.

When starting out, pick one category. Trying to mix several in one PoC will make outcomes harder to evaluate and defend.

Step 2: Match the Agent Type to the Workflow

Once the work is clear, the choice of agent follows naturally.

- A research agent fits workflows that require analysis, synthesis, and insight generation. It pulls from multiple sources and produces structured outputs.

- An automation agent is built for consistency. It executes predefined steps, updates systems, and removes manual effort from repeatable processes, and it does so reliably every time.

- A predictive maintenance agent monitors equipment or systems, anticipates failures, and triggers proactive actions. It’s ideal for industrial or supply chain management processes where downtime is costly.

- A decision-support agent evaluates inputs, ranks options, or flags risks. It may act independently in low-risk cases and escalate when thresholds are crossed.

- A multi-agent setup is useful when a workflow spans multiple systems or teams. One agent plans, another executes, and a third validates the results. This adds power but also coordination overhead. It is rarely the best starting point.

Getting this wrong leads to misleading results. The PoC might fail even if the underlying idea is sound.

Step 3: Define Autonomy Boundaries Upfront

Autonomy should be deliberate, not taken for granted.

In an assisted setup, the agent suggests actions but waits for approval. This is great for early testing or sensitive data processing.

A controlled setup allows the agent to act within defined boundaries. Routine cases go ahead automatically. Exceptions trigger escalation.

In a supervised setup, the agent operates independently and logs every action. Reviews happen after execution. This works best for low-risk, high-volume tasks.

Granting too much autonomy too soon increases risk, while being overly restrictive limits measurable value. The optimal balance depends on the potential cost of errors.
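
The three setups above can be expressed as a small routing policy. This is a minimal sketch, assuming each proposed action carries a numeric risk score; the `risk_score` field, the `0.5` threshold, and the return labels are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class AutonomyMode(Enum):
    ASSISTED = "assisted"      # agent proposes, a human approves every action
    CONTROLLED = "controlled"  # routine cases run, exceptions escalate
    SUPERVISED = "supervised"  # agent acts alone, humans review the log afterwards


@dataclass
class ProposedAction:
    name: str
    risk_score: float  # hypothetical 0.0-1.0 score from the PoC's own risk rules


def route_action(action: ProposedAction, mode: AutonomyMode,
                 risk_threshold: float = 0.5) -> str:
    """Return 'await_approval', 'execute', or 'escalate' for one proposed action."""
    if mode is AutonomyMode.ASSISTED:
        return "await_approval"
    if mode is AutonomyMode.CONTROLLED:
        return "execute" if action.risk_score < risk_threshold else "escalate"
    # SUPERVISED: act immediately; review happens after execution via the log.
    return "execute"


print(route_action(ProposedAction("update_record", 0.2), AutonomyMode.CONTROLLED))
print(route_action(ProposedAction("issue_refund", 0.9), AutonomyMode.CONTROLLED))
```

Making the mode an explicit parameter means the same agent can start in the assisted setup and be moved to a looser one only when the PoC evidence supports it.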

Step 4: Keep Scope Realistic for Time and Cost

PoCs move fast, but they are not lightweight experiments. They need system access, integration, and evaluation time to give you a true picture.

As a rough guide:

- Research-focused agents tend to run a bit shorter and cost less.

- Automation agents require more setup because they depend on access to other systems.

- Decision-oriented agents need longer validation to build trust.

- Multi-agent systems demand the most planning and testing.

Step 5: Establish Clear Ownership for Autonomous Agents

A PoC without clear ownership will drift.

One person needs to define the objective and what success looks like. Someone with domain knowledge has to validate assumptions and data quality. Technical access and security need to be tackled upfront. Finally, results need to be evaluated honestly, including failure modes.

When these roles are clear, PoCs stay focused and produce some real insights that inform next steps.

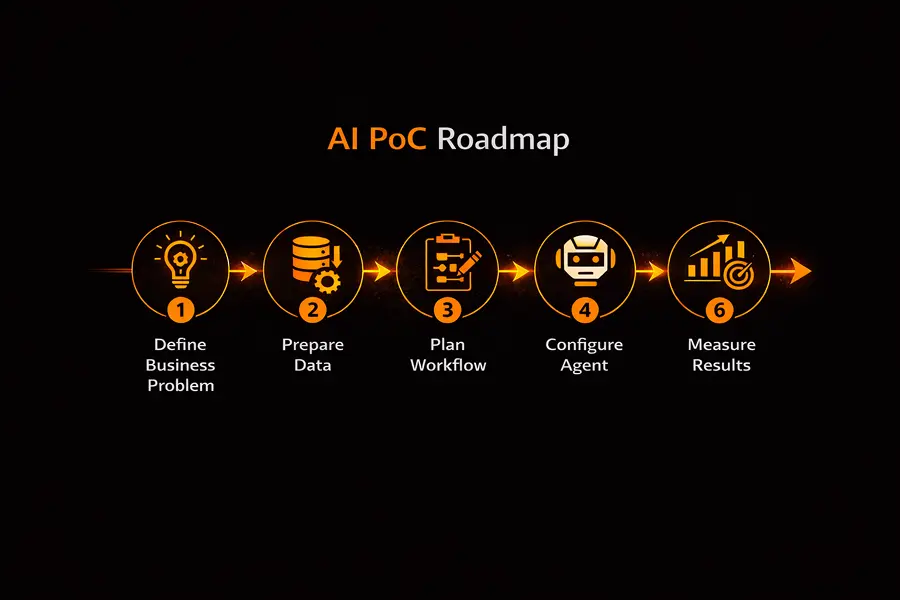

Building a Practical AI Proof of Concept for Agentic Systems

A real AI PoC isn’t just about cobbling together some tools. It’s a controlled experiment designed to find out if an autonomous agent can actually make a difference in a real business process. The whole point is to keep things focused and the results usable. The following steps are structured with that in mind:

1. Pin Down a Business Problem

Start by tackling a single workflow, not a whole department, and not some lofty vision.

Be really clear on:

- What specific task is the agent going to handle?

- How is that task handled at the moment?

- What would count as success, with some actual numbers to back it up?

We’re talking about targets like cutting down processing time, slashing error rates, or just plain reducing the amount of manual effort needed. This keeps the PoC grounded and actually gives you something to measure.

2. Ensure Data Readiness and Access

An agent is only going to be as good as the inputs it gets.

Use real data where possible, but make sure you’ve got it isolated from the main production system. Then set up some test cases that cover all the different scenarios: the ones where it all goes wrong, the ones where you give it some dodgy data, and the edge cases too. This is often where weak data readiness or unclear logic is hiding.
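
One lightweight way to check that the test set really covers those scenario types is to tag each case and look for gaps. The sketch below is an assumption about how a team might organise this; the `Scenario` structure and the four kind labels are hypothetical, not a standard.

```python
from dataclasses import dataclass

# Hypothetical labels for the scenario types a PoC test set should include.
REQUIRED_KINDS = {"happy_path", "failure", "bad_data", "edge_case"}


@dataclass
class Scenario:
    name: str
    kind: str      # one of REQUIRED_KINDS
    payload: dict  # the input handed to the agent


def coverage_gaps(scenarios: list[Scenario]) -> set[str]:
    """Return the scenario kinds that have no test case yet."""
    covered = {s.kind for s in scenarios}
    return REQUIRED_KINDS - covered


suite = [
    Scenario("complete ticket", "happy_path", {"subject": "Reset password", "body": "Please help"}),
    Scenario("downstream system unavailable", "failure", {"subject": "Refund", "simulate_outage": True}),
    Scenario("empty body", "bad_data", {"subject": "", "body": ""}),
]
print(sorted(coverage_gaps(suite)))
```

Running the check before the PoC starts shows which kinds of input the agent has never been tested against.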

3. Map Out the Workflow

Draw a clear picture of the whole process from start to finish: inputs, steps, decisions, tools, and outputs.

This forces you to get clear about what the agent is actually supposed to be responsible for, and where human oversight is needed. More importantly, it helps prevent silent failures, where the agent completes every step correctly but produces output that is useless.

4. Set Autonomy and Safety Nets

Decide just how much freedom you’re going to give the agent to operate on its own.

Define where high-risk actions need approval, how far you want the agent to be able to go on its own, and what you want to happen if it all goes wrong. And make sure you’re logging all the AI-driven decisions and actions, so you can review them all later.

5. Only Connect the Bits That Matter

Connect the agent to the systems it actually needs to talk to, like CRM, ticketing, docs, and analytics.

Don’t go overboard with the integrations. Every extra system you add just makes things more complicated without actually improving the signal you’re getting from the PoC.

6. Run It and See How It Goes

Test it all with some real-world scenarios, including all the messy inputs and the ones that go wrong.

Track where the agent actually does a good job, where it slows down, and where you need to step in to help.

7. Measure Up and Decide

Evaluate results using actual business metrics, not just the numbers that come out of the model.

Look at things like task completion rates, time saved, cost per task, and operational impact. And document what worked, what failed, and why.

A good PoC does more than just prove that something works. It also gives you a clear idea of whether it makes sense to scale up, and what you’re going to need to change first.
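
The business metrics named in this step can be computed from a simple log of PoC runs. This is a minimal sketch under assumptions: the `TaskRun` fields and the manual `baseline_minutes` figure are hypothetical and would come from your own workflow measurements.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    completed: bool           # agent finished without a human taking over
    agent_minutes: float      # time the agent needed for the task
    human_interventions: int  # times a person had to step in
    cost_usd: float           # model and infrastructure cost for this run


def summarize(runs: list[TaskRun], baseline_minutes: float) -> dict[str, float]:
    """Aggregate PoC runs into business-facing metrics."""
    if not runs:
        raise ValueError("no runs to summarize")
    n = len(runs)
    completed = sum(1 for r in runs if r.completed)
    avg_minutes = sum(r.agent_minutes for r in runs) / n
    total_cost = sum(r.cost_usd for r in runs)
    return {
        "completion_rate": completed / n,
        "avg_minutes_saved": baseline_minutes - avg_minutes,
        "interventions_per_task": sum(r.human_interventions for r in runs) / n,
        # Failed runs still cost money, so divide total cost by completed tasks.
        "cost_per_completed_task": total_cost / completed if completed else float("inf"),
    }


runs = [
    TaskRun(True, 4.0, 0, 0.10),
    TaskRun(True, 6.0, 1, 0.20),
    TaskRun(False, 10.0, 2, 0.30),
    TaskRun(True, 4.0, 0, 0.20),
]
print(summarize(runs, baseline_minutes=30.0))
```

Dividing total cost by completed tasks, rather than all attempts, keeps failed runs from making the agent look cheaper than it is.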

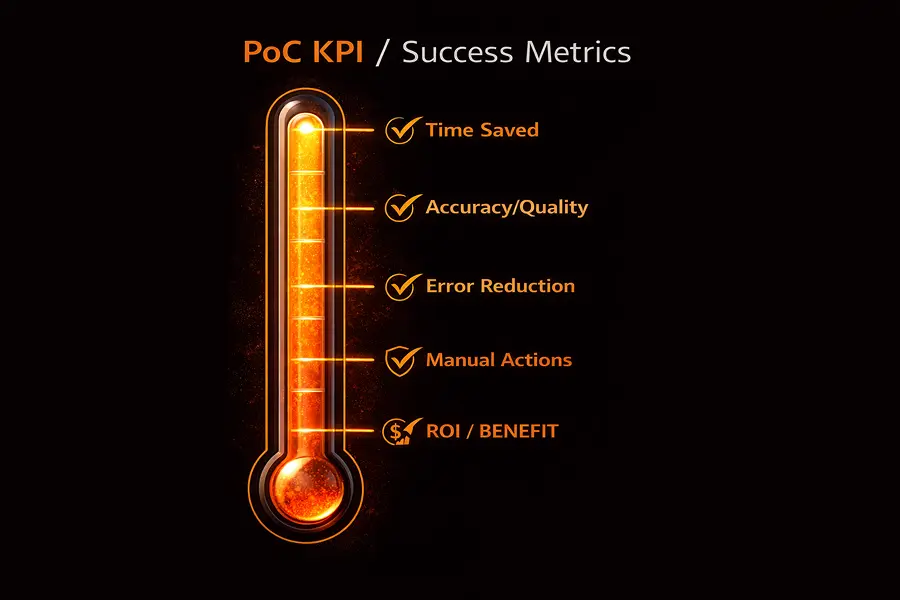

Keys to a Successful Agentic AI PoC: Ensuring Business Impact and Data Readiness

A good PoC should focus on workflows where we can easily measure the impact. That might be cutting processing time, reducing errors, speeding up resolution, or cutting down on manual effort. If we cannot measure the results, then the PoC won’t be able to prove its worth.

Safety and compliance aren’t just ‘nice to haves’. They have to be built right in from the start. Even small experiments can touch sensitive data or regulated processes. So we need to have our approval steps, action limits, and audit logs all sorted before we even start. It’s the only way to prove that the solution works in the real world.

Clarity is just as important as performance. A useful PoC should make it obvious where the AI can handle things on its own and where human intervention is still needed. That way, we can avoid getting too carried away with the tech and make sure we’re being realistic about our planning.

Finally, a good PoC needs to give us a clear next step. That might be scaling up the workflow, adjusting the boundaries, or just deciding to stop the experiment altogether. If the outcome is too ambiguous, then the PoC probably hasn’t been well-scoped.

Capabilities That Really Matter for a PoC

A Proof of Concept needs to be judged on what it actually does, not just what it claims to do. We should be looking at the capabilities that decide whether an agent is actually useful in real-world jobs.

From Start to Finish: End-to-End Task Execution

The agent needs to be able to handle a task from start to finish without constantly coming back to you. That means breaking work into manageable chunks, using the right tools for the job, and completing the whole process with minimal human intervention.

Built-in Checks and Balances: Validation

A good agent will check its own work, catch any inconsistencies that might arise, and pause or send the issue to someone for review if it’s not confident in its output. This is how errors get contained right from the start.
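
A self-check of this kind can be as simple as verifying required fields and a confidence floor before anything is submitted. The sketch below is illustrative only: the `confidence` score, the `0.8` floor, and the field names are assumptions, and how an agent produces a trustworthy confidence value is a separate design question.

```python
from dataclasses import dataclass, field


@dataclass
class AgentOutput:
    fields: dict[str, str]
    confidence: float  # hypothetical self-reported score between 0.0 and 1.0


@dataclass
class ValidationResult:
    ok: bool
    issues: list[str] = field(default_factory=list)


def validate(output: AgentOutput, required: list[str],
             min_confidence: float = 0.8) -> ValidationResult:
    """Collect every problem found; an empty issue list means the output can proceed."""
    issues = [f"missing field: {name}" for name in required
              if not output.fields.get(name, "").strip()]
    if output.confidence < min_confidence:
        issues.append(
            f"confidence {output.confidence:.2f} below {min_confidence:.2f}")
    return ValidationResult(ok=not issues, issues=issues)


draft = AgentOutput({"applicant": "A. Example", "amount": ""}, confidence=0.5)
result = validate(draft, required=["applicant", "amount"])
if not result.ok:
    print("Paused for human review:", result.issues)
```

Returning the full list of issues, rather than stopping at the first one, gives the reviewer enough context to fix the case in one pass.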

Tracking Every Step: Full Observability

Every single action the agent takes should be traceable. You and your team should be able to see what the agent did, in what order it did it, and why it made those decisions. Without this level of transparency, it’s almost impossible to diagnose failures and build trust in the agent.
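
As a rough illustration of what traceable can mean in practice, the sketch below records each step with its order, tool, stated reason, and outcome. The `AuditTrail` class and its field names are hypothetical, and the in-memory list is a stand-in for whatever log storage the PoC actually uses.

```python
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only, in-memory record of agent steps."""

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def record(self, step: str, tool: str, reason: str, outcome: str) -> None:
        """Store what was done, with which tool, why, and what happened."""
        self._entries.append({
            "seq": len(self._entries) + 1,  # preserves the order of actions
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "step": step,
            "tool": tool,
            "reason": reason,
            "outcome": outcome,
        })

    def to_jsonl(self) -> str:
        """Serialize the trail as one JSON object per line for later review."""
        return "\n".join(json.dumps(entry) for entry in self._entries)


trail = AuditTrail()
trail.record("fetch_ticket", "ticketing", "new ticket arrived", "ok")
trail.record("route_ticket", "ticketing", "matched billing keywords", "sent to billing queue")
print(trail.to_jsonl())
```

Capturing the reason alongside the action is what lets a reviewer reconstruct why the agent chose a path, not just what it did.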

No Mocking Around: Real System Integration

The AI agent needs to work with actual systems, not just artificial data or an isolated demo. If it can’t do that, the PoC is never going to give us a true picture of how it will perform in the real world.

Don’t Give the Agent a Free Pass: Governance Controls

Autonomous systems need rules to keep them in check. Any high-risk actions should need approval, boundaries need to be set before the agent starts, and there need to be fallback plans in place in case the agent does something wrong.

Measuring Success: Evaluation

When we’re reviewing a PoC, we need to be able to measure its success against clear, real metrics like how many tasks it can complete, how much time it saves, how many errors it reduces, and what the cost per task is. These numbers are what decide whether the PoC makes the cut or gets stopped.

If these capabilities are missing from a PoC, it may look impressive, but it won’t support decisions you can actually act on.

Practical Challenges to Watch Out For

Even when you’ve done everything right, well-scoped PoCs can still run into trouble:

- You might find that there’s not enough quality data or that the inputs are inconsistent.

- The AI models might start behaving in unexpected ways.

- Integrating with existing systems can be slow and fragile.

- Security reviews and access restrictions can get in the way.

- Some teams might be resistant to automation.

- The PoC setup might not be the same as the production environment.

If we can anticipate these issues, we can make it a lot easier on ourselves later on.

Conclusion

Agentic AI PoCs aren’t tech demos. They’re business tests built around a single question: does letting a system act on its own actually improve a specific workflow in a measurable way?

When the scope is right, a PoC produces evidence instead of assumptions. It shows where work gets faster, where costs drop, and where human oversight still matters. It also surfaces risks early, before autonomy reaches critical systems. In that sense, the PoC becomes a decision tool rather than a leap of faith.

Cost clarity matters here as well. Understanding the cost to build an AI agent helps teams judge whether the results justify scaling, adjusting the boundaries, or stopping altogether.

A focused PoC replaces hype with real-world validation. The outcome is straightforward: scale, refine, or walk away. And if you’re looking to validate an agentic AI use case with clear metrics, controlled risk, and a defined path to deployment, ProductCrafters can design and run agentic AI PoCs that stay anchored in real business impact.